Comparing appearance-based controllers for nonholonomic navigation from a visual memory

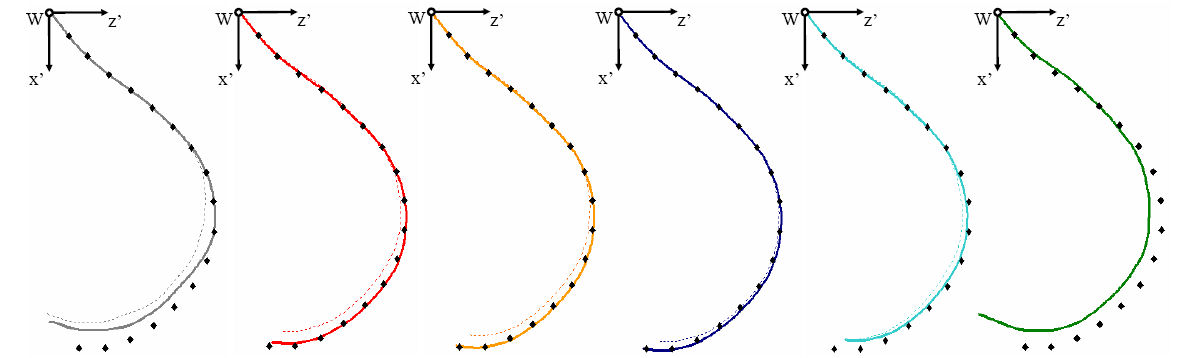

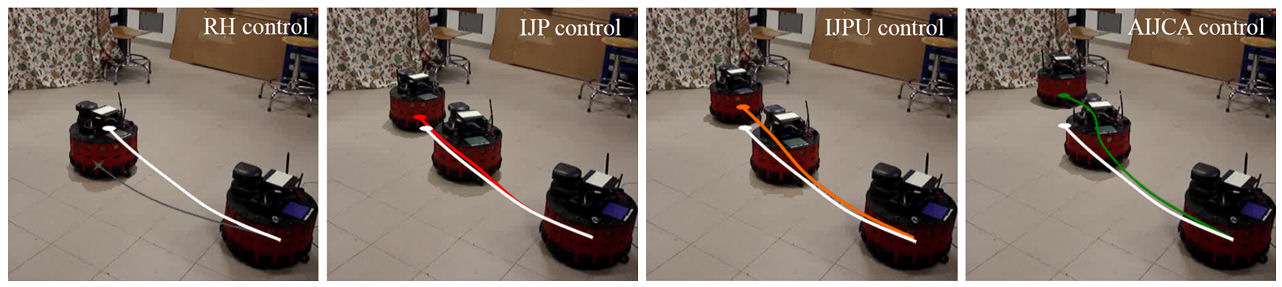

Our visual navigation framework relies on a monocular camera, and the navigation path is represented as a series of reference images acquired during a preliminary teaching phase. We focus on six controllers for replaying the taught path: one pose-based controller (RH) and five image-based controllers (IJP, IJPU, IJC, IJCU, AIJCA). These controllers have been evaluated and compared in various experiments. Results in a simulated environment and on a real robot show that the two image Jacobian controllers that exploit the epipolar geometry to estimate feature depth (IJP and IJC) outperform the four other controllers, both in the pose space and in the image space. However, we also show that the image Jacobian controllers that use uniform and constant feature depths (IJPU and IJCU) are effective alternatives whenever sensor calibration or pose estimation is inaccurate.

In all six control schemes, we set a constant linear velocity and apply a nonlinear feedback, based on the visible feature points, to the robot angular velocity. The performance of the controllers is validated and compared in simulations and in experiments on a unicycle-like robot equipped with a pinhole camera.
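To make the idea of "constant linear velocity plus feedback on the angular velocity" concrete, here is a minimal sketch of a generic image Jacobian law with a uniform feature depth. It is not the authors' IJPU or IJCU controller, whose exact form is given in the paper. The sketch assumes a camera at the robot's rotation center with its optical axis along the heading, normalized image abscissas, and a counterclockwise-positive angular velocity. The names `angular_velocity` and `unicycle_step`, the gain, and the default depth are hypothetical.

```python
import math


def angular_velocity(current_x, desired_x, v, depth=1.0, gain=0.5):
    """Angular velocity that drives feature abscissas toward their references.

    current_x, desired_x: normalized abscissas of matched points in the
    current and reference images. depth: one assumed depth shared by all
    points (a uniform-depth assumption made for this sketch).
    """
    if len(current_x) != len(desired_x) or not current_x:
        raise ValueError("need matched, non-empty feature lists")
    num = 0.0
    den = 0.0
    for x, xd in zip(current_x, desired_x):
        j_v = x / depth      # effect of the forward velocity on x
        j_w = 1.0 + x * x    # effect of the angular velocity on x
        # Desired image motion: exponential decay of the error (x - xd).
        target = -gain * (x - xd) - j_v * v
        # Accumulate the least-squares solution of j_w * w = target.
        num += j_w * target
        den += j_w * j_w
    return num / den


def unicycle_step(px, py, theta, v, w, dt):
    """One Euler integration step of the unicycle kinematic model."""
    return (px + v * math.cos(theta) * dt,
            py + v * math.sin(theta) * dt,
            theta + w * dt)
```

With a point that appears to the right of its reference position (for example `angular_velocity([0.1], [0.0], v=0.0)`), the law returns a negative value, that is, a clockwise turn under the stated sign convention. Replacing the single `depth` argument with per-point estimates would be the natural place to plug in depths recovered from epipolar geometry.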

This work has been developed by A. Cherubini, M. Colafrancesco, G. Oriolo, L. Freda, and F. Chaumette and submitted to the ICRA09 "Workshop on Safe navigation in open and dynamic environments - Application to autonomous vehicles". Refer to this paper for more details.

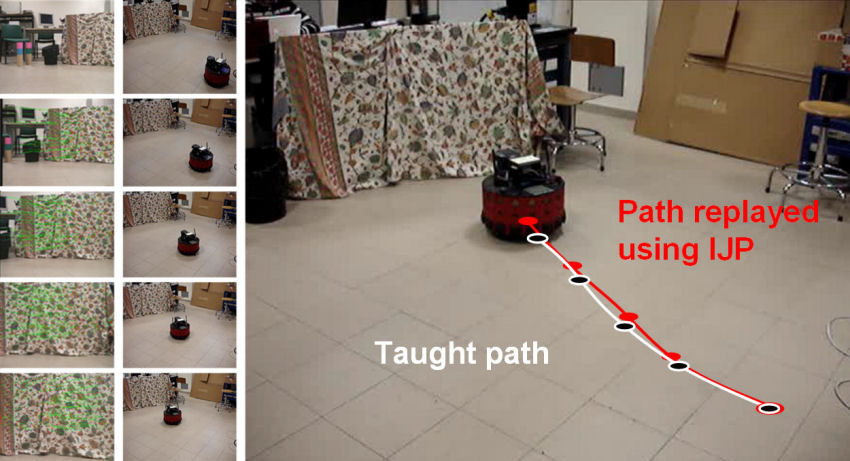

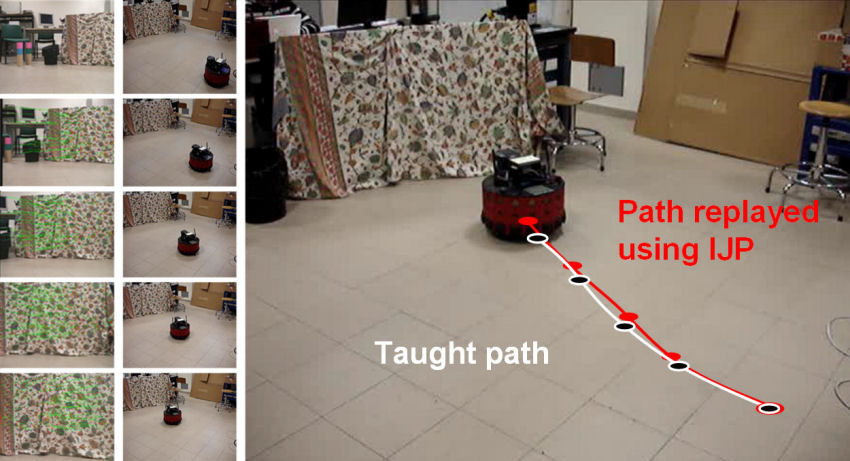

In this clip, the path replayed by the best appearance-based controller (IJP) is compared to the taught path.

The other clips show experiments where the same path is replayed using the IJPU, AIJCA, and RH appearance-based controllers. When replaying with RH, the controller fails after reaching the second image.